Inside The Witcher 4 tech demo: the CD Projekt Red and Epic interview

Unreal Fest 2025 kicked off with an impressive demonstration of how The Witcher 4 developers CD Projekt Red are getting to grips with Unreal Engine 5. The 14-minute tech demo features lush forest landscapes, detailed character rendering and impressive hardware RT features, all running at 60fps on a base PlayStation 5. It’s one of the most visually ambitious projects we’ve seen for current-gen consoles even at this early stage, and we wanted to learn more about how the demo was created.

To find out, Digital Foundry’s Alex Battaglia took a trip to CDPR’s offices in Warsaw and spoke to key figures at CD Projekt Red – including Charles Tremblay, VP of technology; Jakub Knapik, VP of art and global art director; Kajetan Kapuscinski, cinematic director; Jan Hermanowicz, engineering production manager – as well as Kevin Örtegren, lead rendering programmer at Epic Games.

A selection of questions and answers from the interview follows below. As usual, the text has been slightly edited for clarity and brevity. You can see the full interview via the video embedded below. Enjoy!

When did the cooperation between CDPR and Epic begin for The Witcher 4 tech demo, given the announcement of The Witcher going to Unreal in 2022?

Jan Hermanowicz: That will be about three years by now. When it comes to this particular demo, it’s sometimes hard to draw a line, but this is a relatively fresh thing that we started working on sometime last year.

Why did CDPR switch from RedEngine to Unreal Engine in 2022?

Charles Tremblay: I get this question often, and I always preface it by saying that I don’t want people to think the tech we had was problematic – we’re super proud of what we achieved with Cyberpunk. That being said, when we started the new Witcher project, we wanted to be more of a multi-production company, and our technology was not well made for that. It was one project at a time, put the gameplay down, then move on. Second, we wanted to extend to a multiplayer experience, and our tech was for a single-player game. So we decided to partner with Epic to follow the company strategy.

Seeing the Witcher 4 demo running first on PS5, it goes against the grain of what CDPR has done in the past in terms of its PC-first development and PC-first demos for Cyberpunk 2077 and The Witcher 3. So why target PS5 at 60fps?

Charles Tremblay: When we started the collaboration, we had super high ambition for this project. As you said, we always do PC, we push and then we try to scale down. But we had so many problems in the past that we wanted to do a console-first development. We saw it would be challenging to realise that ambition on PS5 at 60fps, which is why we started to figure out what needs to be done with the tech. We have all our other projects at 60fps, and we really wanted to aim for 60fps rather than going back to 30fps.

Jan Hermanowicz: We had a mutually shared ambition with Epic about this; it was the first pillar we established.

Kevin Örtegren: It was a really good opportunity for the engine as well, to use this as a demo and showcase that 60fps on a base console is achievable with all the features that we have.

Do you see 60fps as a challenge or a limitation?

Charles Tremblay: We’re perfectly aware that we still have a lot of work ahead of us – this is a tech demo; the whole gameplay loop isn’t implemented, there’s no combat and there’s a lot of things that don’t work. But still, the ambition is set. It’s too early to say if we’ll nail it, but we’ll work as hard as we can to make it, for sure.

How did you manage to get hardware Lumen running at 60fps on console, when almost all other implementations are at 30fps?

Kevin Örtegren: It comes down to performance and optimisations on both the GPU side and CPU side. On the CPU, there’s been a ton of work on optimising away a lot of the cost on the critical path of the render thread: multi-threading things, removing all sync points we don’t need, allowing all types of primitives to actually time slice… On the GPU, tracing costs need to be kept low, so having good proxies and streaming in the right amount of stuff in the vicinity… making this work out of the box is core to that 60fps.

Why target 60fps with hardware Lumen when the software path exists and runs faster?

Kevin Örtegren: The software path has a lot of limitations, things that we simply cannot get away from, no matter how hard we try. The distance field approximation is effectively static, right, and the more dynamic worlds we build, we want that to also be part of the ray tracing scene. So using hardware RT is much better quality-wise; we can get much better representation with RT than with distance fields. Generally, it is also kind of the future, so we’re focusing on hardware Lumen and we consider software Lumen to hopefully be a thing of the past.

Jakub Knapik: Looking at it from a Witcher point of view, this game will have a dynamic day/night cycle, so you need to secure the environments lighting-wise for all light angles, and it’s an open world game, so you need to make sure the way you make content will work and it will not light leak in all those situations. Hardware Lumen is much better for securing this. And like Kevin said, you can actually move trees and have proper occlusion.

For us, going with software Lumen would have a lot of limitations that would kill us from a production point of view; otherwise we’d have to change the design of the game.

Kevin Örtegren: It’s a good point. If you do software Lumen on one platform, but you want to scale up to hardware Lumen on another platform, working with both is problematic. You want to have the one representation, it’s much better.

What effect does having RTGI and RT reflections on consoles as baseline tech have on art design?

Jakub Knapik: It was challenging to find a middle ground artistically with Cyberpunk so that it works on both consoles and high-end PCs. With this approach, we only have to alter the game once, and we can make sure it’s visually similar – it just gets better – and the art direction is consistent on all platforms.

Can you explain what it was like using Lumen for the first time in cinematics?

Kajetan Kapuscinski: The tools we were provided from Epic and the tools we’re co-developing with them open up a lot of possibilities to have creative freedom and create the things you’ve now seen in the beginning of the demo. It’s liberating in many ways.

Jakub Knapik: There are many aspects to the look, apart from Lumen, that we actually introduced in this demo – like lens simulation, film simulation, ACES tone mapping, all of that stuff we also added when working on the technology for The Witcher. So that all contributes to a slightly more effortless approach to scenes.

How were the world and terrain created for this demo?

Jan Hermanowicz: The pipeline we’re using for this is actually the pipeline for the main game, so there’s ideation and then landscape creation within DCC tools. We do the first pass outside of the engine, then import that height map into the engine, then do the rest of the sculpting in Unreal Engine. That’s purely the terrain; what you see is a layered picture with meshes like additional rock formations, trees, that sort of stuff, there’s a procedural (PCG) layer. Effectively, we replace the auto grass with the runtime GPU-based PCG, and we use that for the small debris, trash, grass and stuff like that.
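As a rough illustration of the layering Jan describes (a height map first, then a procedural scatter pass on top), here is a minimal CPU-side sketch in Python. It is not the demo's runtime GPU-based PCG and it does not use any Unreal API; the function name `scatter_on_heightmap`, its parameters and the slope rule are all assumptions made for the example.

```python
import random


def scatter_on_heightmap(heights, cell_size, max_slope, per_cell, seed=0):
    """Return (x, y, z) instance positions on cells flatter than max_slope.

    Hypothetical helper: `heights` is a 2D list of terrain heights, one per
    grid corner. The slope rule below is an assumption for illustration.
    """
    rng = random.Random(seed)  # seeded so the scatter is reproducible
    points = []
    rows, cols = len(heights), len(heights[0])
    for r in range(rows - 1):
        for c in range(cols - 1):
            h = heights[r][c]
            # Crude slope estimate from the two neighbouring corners.
            rise = max(abs(heights[r][c + 1] - h), abs(heights[r + 1][c] - h))
            if rise / cell_size > max_slope:
                continue  # too steep: leave this cell to rocks or cliffs
            for _ in range(per_cell):
                u, v = rng.random(), rng.random()
                # Simplification: every instance takes the cell's corner height.
                points.append(((c + u) * cell_size, (r + v) * cell_size, h))
    return points


terrain = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 5.0],
    [0.0, 0.0, 5.0],
]
grass = scatter_on_heightmap(terrain, cell_size=1.0, max_slope=0.5, per_cell=2)
```

In this toy terrain, three of the four cells are flat enough to receive instances and the cell next to the 5-unit step is skipped.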

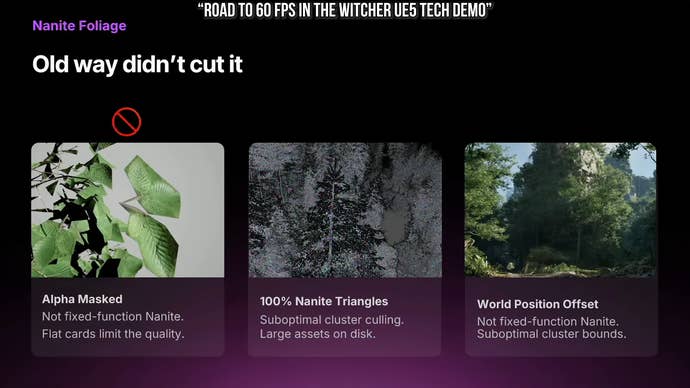

How did the team view the paradigm shift in how vegetation is made? After all, it’s been done with alpha cutout cards since I was a child!

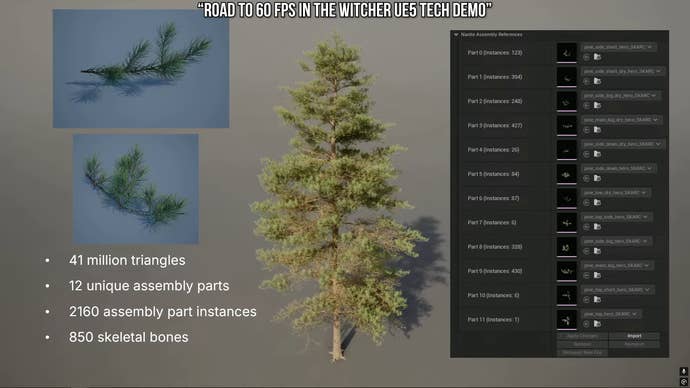

Jakub Knapik: I think that combo of Nanite foliage plus PCG is a killer combo. Creating big trees is one problem, creating small foliage is another problem, and with this demo we tried to combine both techniques. Having big moveable trees that are illuminated properly was our biggest concern, because if you have a static tree, that’s an approachable problem. If you have a moving tree, that’s really hard.

I remember being in a conversation with our art director, Lucjan Więcek, and saying to him “you can have good lighting, or moving trees”. It’s hard to have both. There was a lot of effort from CDPR’s and Epic’s tech teams to solve that problem. That was by far the biggest change and concern we had with The Witcher.

Kevin Örtegren: As you said, alpha cutout cards have been the technique for many, many years. But it doesn’t really cut it – it’s flat, so it looks good from a certain angle but not every angle, and shadows might be problematic as well. Throwing geometry at it is the only way to make it really volumetric.

Jan Hermanowicz: Exactly, and it opens up new possibilities for artists. So, for example, the pine needles that you see up-close in the demo are a perfect case for geometry. It requires some change of thinking among foliage artists, but the possibilities outweighed any new challenges.

Charles Tremblay: The reason we had the pine tree is because we thought it was the worst case scenario, and we worked on it for a long time. I was super stressed when we started work on the demo, and we had to consider also the asset space on disk, all the assemblies… In The Witcher, the forest is the soul of the game, so it couldn’t be done the traditional way.

Jan Hermanowicz: One of the best days was finding out this crazy amount of polygons without alpha actually ran faster than the classic cards approach.

Do you see this approach also working on other areas of rendering?

Kevin Örtegren: It’s possible, we’ve discussed it. The voxel idea isn’t actually all that new: Brian Karis, who came up with Nanite, had an HPG talk with a section on voxels a few years ago… at the time, it wasn’t a perfect fit, but it turns out it was actually a very good fit for foliage. So anything that looks like foliage might be a contender to use this tech.

How does this voxel-based approach to foliage fit into the classic lighting pipeline? How is everything lit and shaded?

Kevin Örtegren: They actually fit in very nicely – part of the standard Nanite pipeline is replaced by the voxel path, and that same path runs for VSMs. That’s why it’s kind of cheap to render into shadows in the distance, because they’re just voxels – that just works out of the box. Lighting-wise, it’s regular directional light, with improvements to the foliage shading model. On the indirect side, we have a simplified representation which is static for performance reasons, so it scales up.

How did you get virtual shadow maps (VSMs) running at 60fps when that’s relatively rare for shipping UE5 games on console?

Kevin Örtegren: There’s been a lot of work for a long time on improving performance in VSMs; I think a lot of times, developers turn it off because they have a lot of non-Nanite geometry. Obviously the settings are important as well, you can’t go with the highest resolution and highest LOD bias; here with the demo, we have a sensible setup. You can see some flickering on skin and some surfaces from lower-resolution shadows, but it works for us.

How was the demo’s flawless frame-rate achieved? I know you’re using triple buffering, but how does it work?

Kevin Örtegren: First of all, the average frame-time has to be reasonable, and we use dynamic resolution scaling to make sure we’ve got an achievable 60fps on every frame. Then we have the camera cuts, where we lose the history and we have to re-render a lot of stuff. Overdraw is massive on that first frame, and spikes can be 10ms or more and you can drop a frame.

Tackling that required an optimisation to prime that data so we have something to cull against, which brings spikes down significantly. In cases where we’re going above 16.6ms anyway, given we don’t have a super low latency mode enabled, we actually have quite a bit of a buffer zone. If one frame goes over, but the next one doesn’t, you can start to catch up and not drop that frame. Essentially, between the time you submit your work from the CPU to the GPU and the time that work has to finish before the next present, you have two or three frames of buffer to eat those hitches.

If you look at the demo more closely, you can see that the first frame is pretty good after the camera cut, and that’s because we render two frames before the next present, and then just discard one of them. The first one provides the history for the second one, which means that second one looks much better. So if we manage our frame times well enough, we can get away with that.
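The dynamic resolution scaling mentioned at the start of this answer can be pictured as a small feedback loop: measure the GPU time of the last frame, then adjust the render scale so the next frame is likely to fit the budget. The Python sketch below is one simple way such a controller could work, assuming GPU cost grows with pixel count; it is not Unreal Engine's actual heuristic, and the name `next_resolution_scale` and its limits are hypothetical.

```python
import math


def next_resolution_scale(scale, gpu_ms, budget_ms=1000.0 / 60.0,
                          min_scale=0.5, max_scale=1.0):
    """Return the per-axis resolution scale to try on the next frame.

    Assumption: GPU time is proportional to pixel count, i.e. to scale**2,
    so the per-axis correction is the square root of budget / measured time.
    `gpu_ms` must be greater than zero.
    """
    ideal = scale * math.sqrt(budget_ms / gpu_ms)
    # Clamp so the image never gets too soft and never exceeds native output.
    return max(min_scale, min(max_scale, ideal))


# A frame that took four times its budget asks for half the resolution per axis.
heavy = next_resolution_scale(1.0, gpu_ms=40.0, budget_ms=10.0)
# A frame well under budget scales back up, but is capped at native resolution.
light = next_resolution_scale(0.8, gpu_ms=5.0, budget_ms=10.0)
```

A production controller would typically also smooth its input over several frames and leave some headroom below the budget; both are omitted here to keep the sketch short.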

Would this technique work in other games? If there was a button in the options that said “smooth cutscenes”, I’d always click it.

Kevin Örtegren: There are two options here, one being the way we sync the game thread to the GPU – there are already options for that. You can do really low latency stuff, you can sync with the presents. Or you can sync your game thread to the render thread, then you get a bit of a pipeline going which smooths things out. Then you can select double buffering or triple buffering.
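To illustrate why a deeper buffer lets a single slow frame be absorbed rather than dropped, here is a toy Python model of frame pacing at 60fps. It assumes the CPU submits one frame per refresh interval and that each frame must finish before a present deadline `buffer_frames` intervals after submission; the function `count_missed_presents` and the frame times are invented for the example, and real swap-chain behaviour is more involved.

```python
def count_missed_presents(gpu_ms, buffer_frames, interval_ms=1000.0 / 60.0):
    """Count frames whose GPU work finishes after their present deadline.

    Toy model (assumption): frame i is submitted at i * interval_ms and is
    due on screen at (i + buffer_frames) * interval_ms. The GPU runs frames
    back to back and never starts a frame before it has been submitted.
    """
    missed = 0
    gpu_free_at = 0.0
    for i, cost in enumerate(gpu_ms):
        submitted_at = i * interval_ms
        start = max(gpu_free_at, submitted_at)
        gpu_free_at = start + cost
        deadline = (i + buffer_frames) * interval_ms
        if gpu_free_at > deadline:
            missed += 1
    return missed


# One 20ms spike (for instance at a camera cut) among 12ms frames.
frame_times = [12.0, 12.0, 20.0, 12.0, 12.0]
tight = count_missed_presents(frame_times, buffer_frames=1)
relaxed = count_missed_presents(frame_times, buffer_frames=2)
```

With one interval of slack the spike misses its present, while with two it finishes in time and the following 12ms frames let the GPU catch up. The trade-off is added latency, which is why this sits opposite the low-latency sync options.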

Jan Hermanowicz: There’s some work we did on the game thread side of things, on the CPU side. We offloaded as much as we could to async, so it can be computed over time, and the Unreal Animation Framework also helps a lot because it moves a lot of animations to the other threads. Plus we’re now smoothly streaming geometry with FastGeo in this demo, so we’re not loading big chunks of a world. Plus, it requires some strategising: we know our world – in the demo and in the full game – and we know when it’s a good time to start loading certain things so that it isn’t just like “oh, it happened!” and there’s a hitch. You can’t predict everything, but having this thought process is an important part of this.

How would CDPR potentially scale graphics to platforms more powerful than the base PS5, eg PS5 Pro or PC?

Jakub Knapik: This is one of the topics that we’re currently discussing. We said before that we wanted to start with the PS5 as the base and that it would be easier to scale up than down. We know that Lumen and these other technologies are providing pretty consistent representation across the scale. What it means exactly is another question – we’re CDPR, we always want to push PCs to the limit. It’s a creative process to decide how to use it. What it means for sure is that we’re going to expand all of the ray tracing features forward.

Kevin Örtegren: It’s another really good argument for hardware Lumen. If you start there, you can scale up easily and add super high-end features like MegaLights.

Charles Tremblay: I don’t want to go into too much detail, and don’t want to over-promise, but it’s something that’s super important to us, if people pay good money for hardware, we want them to have what the game can provide, not a simplified experience. The company started as a PC company, and we want to have the best experience for the PC gamer. But it’s too early to say what it’ll mean for The Witcher 4.

There’s also the Xbox Series S. What would it take to get this demo running on something with less memory and less GPU resources?

Charles Tremblay: I wish we had already done a lot of work on that, but we have not. This is something that’s next on our radar for sure. I would say that 60fps will be extremely challenging – it’s something we need to figure out.

The interview continues beyond this question, but due to time and space constraints we’ll conclude things there. Please do check out the full video interview above. Thanks to our panellists at CDPR and Epic for contributing their time and expertise.
